Security on the Web is undeniably important, both for one’s personal computer and for the websites stored on hosting servers. I have dealt with a variety of hacker intrusion issues over the years, and most have been of the SQL injection or email malware type. Recently, one of my client sites picked up a trojan, which resulted in Google’s “This site may be hacked” notice appearing in its search result links.

As a web developer, I do my best to educate my clients on website safety and take measures to ensure that every site I work on is as safe as possible. I say “as possible” because the strategies, exploits, and techniques hackers use have evolved over the years, which is why the better CMS platforms (Joomla, WordPress, and Drupal) all provide regular version updates that include security fixes.

If you do have a CMS site, then you are likely familiar with the term “SQL injection”. SQL injection is an attack in which “hacker” code is slipped into a database query, typically through a form field or URL parameter, so that it runs against the database and exploits a security vulnerability. To prevent problems, every CMS website should use one or more plugins that provide a good firewall, virus/malware scans, and other measures to shore up the site’s security against hacker bots and individuals. In addition, it is important to have the site files and database routinely backed up, just in case.
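To make the definition concrete, here is a minimal Python sketch using the standard-library sqlite3 module and a hypothetical users table (real CMS platforms typically run PHP with MySQL, so this only illustrates the idea). The first function pastes a visitor’s input straight into the SQL text, so a crafted input rewrites the query and returns every row; the second uses a parameterized query, the standard code-level defense, which treats the same input as plain data.

```python
import sqlite3

def find_user_unsafe(conn, name):
    # VULNERABLE: the input becomes part of the SQL text itself, so quotes
    # and SQL keywords inside it change what the query does.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # The "?" placeholder sends the input separately, as data only.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()

def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])
    # Turns the WHERE clause into: name = 'x' OR '1'='1' (always true).
    malicious = "x' OR '1'='1"
    return find_user_unsafe(conn, malicious), find_user_safe(conn, malicious)

if __name__ == "__main__":
    print(demo())
```

The unsafe version leaks both users; the safe version finds no user literally named `x' OR '1'='1`.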

Ah, but my goal today is not to talk about CMS security, since there are lots of articles written about that. I am writing because not long ago I discovered that Google had added a “This site may be hacked” notice to its search result listings for one of my client sites. Needless to say, I was concerned, but also surprised, since the website is a static one (not a CMS).

If you have a static website, you may think that your site is safe from hacking. It is true that a static site is generally much safer than a dynamic site, but as this case shows, it is not immune. If you are unfamiliar with the terms, “static” refers to a website that doesn’t build its pages dynamically (what I often refer to as “Standard” websites). Dynamic websites, such as those built on a Content Management System (CMS), use a templating system to render the pages (the page parts are assembled when the page loads), and their text content is stored in a database.

If this happens to your static website and you’re not an experienced web developer, you are most likely at a loss as to how to get things back to normal … which, of course, is my purpose today: to share what I did to make my client’s site safe again.

Okay, so the Google message goes on to read:

Unfortunately, it appears that your site has been hacked.

A hacker may have modified existing pages or added spam content to your site. You may not be able to easily see these problems if the hacker has configured your server to only show the spam content to certain visitors. To protect visitors to your site, Google’s search results may label your site’s pages as hacked. We may also show an older, clean version of your site.

Our recommended steps

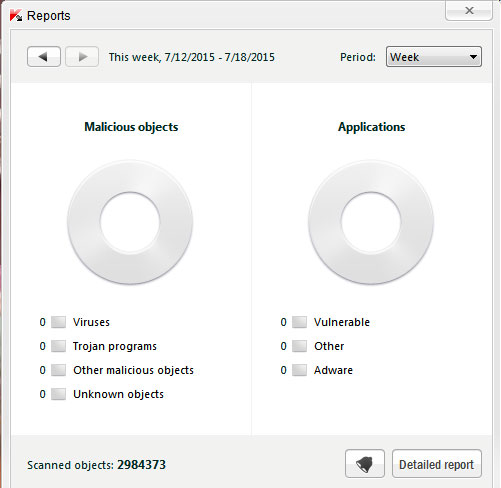

1. Run a virus scan on the computer you access your website from:

This is just a precaution in case someone has obtained your server login information using a trojan, keylogger, etc. If you already run regular scans, then you are likely safe and can skip this step.

2. Download your site files (and database/s if applicable):

Using FTP or your hosting account’s file manager, download all of your current site files, folders, and images from your main directory (public_html, www, etc.) and place them in their own folder, separate from any backup copies of your website. Next, if your site uses one or more databases, log in to your hosting admin area and download them (using phpMyAdmin, Backup Wizard, etc.). A static site won’t use a database, but dynamic add-on scripts such as calendars or banner ads often require one, so check on this if you are unsure.

Once you have the files and database(s), scan each with a “quality” virus-scan program. For our site, both came up clean, but only because the scripting used was not of a typical type that is easily flagged.

3. Create a Webmaster Tools account:

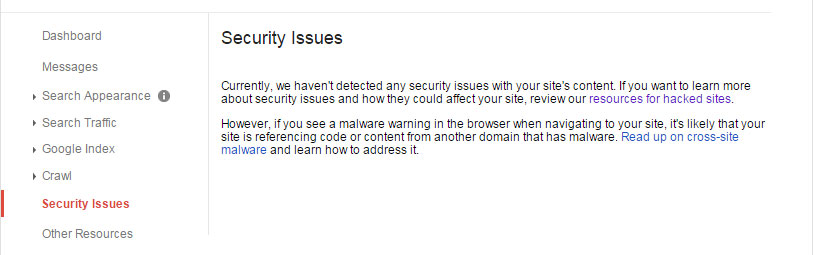

Google’s Webmaster Tools can benefit a website in many ways, one of which is performing a security scan. If you don’t yet have an account, visit https://www.google.com/webmasters/tools/ and sign in with your Google account (all Google properties use the same email/password login). You’ll then need to verify ownership of the website, for example by uploading the HTML verification file Google provides to your hosting account’s public files area.

Once you sign in to Webmaster Tools, check the section called “Security Issues” near the bottom of the links list at left. If there are problems, you will see links to the affected URL(s), which will often be named “pharmacy.html” or similar. The goal of these hackers/spammers is to generate inbound links to their fraudulent sites to increase their SEO value. Note that if a URL is suspected of being hacker/spammer generated, there will also be a link to “request a review”.

Google recommends reading its resources for hacked sites, at https://www.google.com/intl/en/webmasters/hacked/, for detailed information on how to fix your site. That page provides a variety of links and videos on the steps to take for site recovery. It is important to note, though, that the message “This site may harm your computer” is very different from the message “This site may be hacked”. The first means that a script is in place that will attempt to cause malicious harm, while the second (the one my client had) means that one or more scripts have been used to create spam pages. These spam pages will not harm a visitor’s computer, and in most cases a visitor to the site will never see them, since the existing pages are usually left unaltered.

I do think it is worth viewing their videos and linked pages, although most of the material applies to websites with a database, which are much more difficult to clean.

4. Compare site files and look for changes:

At this point, compare your current site files (the ones you just downloaded) with your backup copy (which should be free of intrusion) and look for any differences. On my client’s site I found a variety of differences: an .htm file (I use the .html extension only), a good number of .php files, and, what really surprised me, an edited .htaccess file (the one in the public_html folder, not the root one). For each added file, it is simply a matter of deleting it by FTP; a modified file such as .htaccess can be replaced with your clean backup copy. I then opened up all the folders and checked every file by comparing file sizes, just to ensure that no “extra” code had been added. These all matched my backup versions, so they were safe.
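Comparing file sizes by hand works, but an edit that keeps a file the same length would slip past it. As a stricter alternative (not the method described above), this Python sketch hashes every file in both copies with SHA-256 and lists files that were added, removed, or changed. The two folder arguments are placeholders for wherever you saved your backup and the freshly downloaded files; os.walk also picks up dot-files such as .htaccess.

```python
import hashlib
import os
import sys

def hash_tree(root):
    """Map each file's path (relative to root, using "/") to its SHA-256 hex digest."""
    hashes = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            rel = os.path.relpath(full, root).replace(os.sep, "/")
            with open(full, "rb") as f:
                hashes[rel] = hashlib.sha256(f.read()).hexdigest()
    return hashes

def compare_trees(backup_dir, current_dir):
    """Return (added, removed, changed) lists of relative paths."""
    backup = hash_tree(backup_dir)
    current = hash_tree(current_dir)
    added = sorted(set(current) - set(backup))
    removed = sorted(set(backup) - set(current))
    changed = sorted(p for p in set(backup) & set(current) if backup[p] != current[p])
    return added, removed, changed

if __name__ == "__main__" and len(sys.argv) == 3:
    added, removed, changed = compare_trees(sys.argv[1], sys.argv[2])
    for label, paths in (("ADDED", added), ("REMOVED", removed), ("CHANGED", changed)):
        for path in paths:
            print(f"{label}: {path}")
```

Saved under a hypothetical name such as compare_sites.py, it could be run as `python compare_sites.py backup current`; anything listed as ADDED or CHANGED deserves a close look.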

As for the specific exploit used, my virus scanner identified it as a trojan script known as “Backdoor.PHP.RST.bl”. I found a variety of added files (readme.phtml, rss.php, contact.php, general65.php, javascript4.php, search.php, etc.) in various folder locations, so be sure to check all sub-folders.
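Because the added files were scattered across sub-folders, a short script can walk every folder and flag anything a static site should not contain. This Python sketch is a hypothetical helper, not a virus scanner: it assumes your site only legitimately uses the extensions in EXPECTED_EXTENSIONS (adjust the set for your own site) and lists everything else, including extensionless dot-files such as .htaccess, for a manual check.

```python
import os

# Assumption: the only file types this static site legitimately uses.
# .htm is deliberately absent because this site uses .html only.
EXPECTED_EXTENSIONS = {
    ".html", ".css", ".js", ".jpg", ".jpeg", ".png", ".gif",
    ".svg", ".ico", ".txt", ".xml", ".pdf",
}

def find_unexpected_files(root, expected=EXPECTED_EXTENSIONS):
    """Return sorted relative paths of files whose extension is not expected.

    os.path.splitext(".htaccess") gives an empty extension, so dot-files
    like .htaccess are always listed for review.
    """
    suspects = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            ext = os.path.splitext(name)[1].lower()
            if ext not in expected:
                rel = os.path.relpath(os.path.join(dirpath, name), root)
                suspects.append(rel.replace(os.sep, "/"))
    return sorted(suspects)

if __name__ == "__main__":
    # "public_html" is a placeholder for your downloaded site folder.
    if os.path.isdir("public_html"):
        for path in find_unexpected_files("public_html"):
            print(path)
```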

5. Delete server web-mail and stored mail, and change the password:

If you use your hosting account’s mail server, you are either using Outlook (where mail is stored locally on your computer) or accessing web-mail on the hosting server itself. The thing to understand is that many spammers add links to emails that, if clicked, activate a script that can infect the mail server. I have had a couple of client sites infected this way, and in extreme cases (where protected system files have been compromised), the easiest solution is to have the hosting account reset (deleted and reinstalled) to restore everything to server defaults. For this reason, it is best to keep your email separate from your website server. My suggestion is to use Google Email for Business, or GoDaddy’s Webmail (assuming your domains and hosting are already with them). At a minimum, delete the email accounts, recreate them, and choose a strong password (one that includes lowercase and uppercase letters, numbers, and symbols).
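If you would rather not invent a password yourself, a few lines of Python can generate one that meets the rule above. This minimal sketch uses the standard-library secrets module (intended for security-sensitive randomness) and redraws until the password contains at least one lowercase letter, uppercase letter, digit, and symbol. The SYMBOLS set is an assumption; some hosts reject certain symbols, so trim it if needed.

```python
import secrets
import string

# Assumption: symbols most control panels accept; adjust for your host.
SYMBOLS = "!@#$%^&*()-_=+[]{};:,.?"

def generate_password(length=16):
    """Return a random password with lowercase, uppercase, digits, and symbols."""
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    classes = [string.ascii_lowercase, string.ascii_uppercase, string.digits, SYMBOLS]
    alphabet = "".join(classes)
    while True:
        password = "".join(secrets.choice(alphabet) for _ in range(length))
        # Redraw (rather than patch characters in) until every class appears,
        # which keeps all valid passwords equally likely.
        if all(any(ch in cls for ch in password) for cls in classes):
            return password

if __name__ == "__main__":
    print(generate_password(20))
```

The same approach works for the hosting account password in the next step.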

6. Change the hosting server access password:

Most hosting companies let you change your password from your control panel (cPanel, Plesk, etc.). I suggest doing this as a “just in case” precaution, and making sure the new password is a strong one (see step 5).

7. Request a Review from Google:

As noted in step 3, Google provides a link to request a review within the Security Issues section of Webmaster Tools. Once you’re confident that your site no longer has issues, click this link and explain in the comments section what you’ve done to fix the problem. Google notes that once it determines your site is fixed, it will remove the hacked label. You can also visit Google’s support page about this; the direct link is: https://support.google.com/webmasters/answer/2600725?hl=en

8. Add a Sitemap:

If you don’t yet have a sitemap, I would suggest adding one. For a static website, https://www.xml-sitemaps.com/ can create one for you. A sitemap helps ensure that Google indexes all of your pages, and submitting it can prompt Google to recrawl them, which matters here because the sooner Google recrawls your site, the sooner the “This site may be hacked” message is removed from your search listings. With luck, it should only take a day or two.
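The online generator is the easy route, but the file itself is simple. As a rough Python sketch, assuming every .html file under your site folder should be listed and using https://www.example.com as a placeholder domain, this builds a minimal sitemap in the sitemaps.org format containing only the required `<loc>` entries:

```python
import os
from urllib.parse import quote
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(site_root, base_url):
    """Return sitemap XML listing every .html file under site_root."""
    pages = []
    for dirpath, _dirnames, filenames in os.walk(site_root):
        for name in filenames:
            if name.lower().endswith(".html"):
                rel = os.path.relpath(os.path.join(dirpath, name), site_root)
                pages.append(rel.replace(os.sep, "/"))

    # Setting xmlns as a plain attribute keeps the child tags unprefixed.
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for rel in sorted(pages):
        url = ET.SubElement(urlset, "url")
        # quote() percent-encodes spaces and similar characters but keeps "/".
        ET.SubElement(url, "loc").text = base_url.rstrip("/") + "/" + quote(rel)
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")

if __name__ == "__main__":
    # "public_html" and the domain are placeholders for your own site.
    if os.path.isdir("public_html"):
        print(build_sitemap("public_html", "https://www.example.com"))
```

Save the output as sitemap.xml in your site’s root folder, then submit it in Webmaster Tools.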

9. Add a robots.txt file:

A robots.txt file serves a purpose similar to the robots meta tag in a page’s source code. For example, most websites include a robots meta tag such as:

```html
<meta name="robots" content="index, follow" />
```

This tells the search engines to index the page, and to follow the links.

```html
<meta name="robots" content="index, nofollow" />
```

This tells the search engines to index the page, and not to follow the links.

```html
<meta name="robots" content="noindex, nofollow" />
```

This tells the search engines to not index the page, and not to follow the links.
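To see how a crawler reads these tags, here is a small Python sketch built on the standard-library html.parser module. It collects the directives from any `<meta name="robots">` tag on a page; the class and function names are hypothetical. A page with no such tag returns an empty set, in which case search engines treat it as “index, follow”.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not values.
        # Self-closing tags (<meta ... />) also arrive here via the default
        # handle_startendtag implementation.
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() == "robots":
            for part in (attrs.get("content") or "").split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())

def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives

if __name__ == "__main__":
    print(robots_directives('<meta name="robots" content="index, nofollow" />'))
```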

With a robots.txt file, you can ask search engine crawlers to stay out of specific folders or files on your site by using User-agent and Disallow statements, such as:

```text
User-agent: *
Disallow: /secure/
```

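Keep in mind that robots.txt is only a request: well-behaved crawlers honor it, but malicious bots can simply ignore it, so it is not a security control on its own. You can learn more about the options here: http://www.robotstxt.org/robotstxt.html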
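Python’s standard library includes a robots.txt parser, urllib.robotparser, which shows how a compliant crawler interprets the User-agent/Disallow example. In this sketch, www.example.com is a placeholder domain and the allowed() helper is hypothetical; only paths under /secure/ are reported as off-limits, and only to crawlers that choose to check.

```python
from urllib.robotparser import RobotFileParser

# The same two rules as the example: every crawler, stay out of /secure/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /secure/
"""

def allowed(url, user_agent="*", robots_txt=ROBOTS_TXT):
    """Return True if a crawler honoring robots_txt may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

if __name__ == "__main__":
    for url in ("https://www.example.com/index.html",
                "https://www.example.com/secure/report.html"):
        print(url, allowed(url))
```

Note that the User-agent and Disallow lines sit together with no blank line between them; a blank line ends a record, and a parser like this one would then ignore the Disallow rule.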

10. Block access with the .htaccess file:

Most websites running on Linux with the Apache web server include an .htaccess file. This file lets you control the behavior of your site, or of a specific directory on it, by restricting access, setting up URL redirects and rewriting, managing caching, and using other features that define how visitors interact with your website. An .htaccess file can restrict access much as the robots.txt file does, except that the server actually enforces the rules. It can also be used to secure your website from “bad” bots (which are behind many hacking attempts) by adding a blacklist of known spammers. Our suggestion is the “Ultimate htaccess Blacklist” from Perishable Press. You can view their page at: https://perishablepress.com/ultimate-htaccess-blacklist/
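Conceptually, a user-agent blacklist in .htaccess (built with Apache directives such as SetEnvIf or mod_rewrite’s RewriteCond) compares each request’s User-Agent header against a list of patterns and refuses matching requests. This Python sketch models only that matching step; the three patterns are hypothetical examples, not entries copied from the Perishable Press list. Keep in mind that a bot can fake its User-Agent, so a blacklist only stops bots that identify themselves.

```python
import re

# Hypothetical example patterns for illustration only. For a real site,
# use a maintained list such as the Perishable Press blacklist.
BLACKLIST_PATTERNS = [r"sqlmap", r"nikto", r"libwww-perl"]

# One case-insensitive regex that matches if any pattern appears anywhere.
_BLACKLIST = re.compile("|".join(BLACKLIST_PATTERNS), re.IGNORECASE)

def is_blocked(user_agent):
    """Return True if the User-Agent header matches a blacklist pattern."""
    return bool(_BLACKLIST.search(user_agent or ""))

if __name__ == "__main__":
    print(is_blocked("sqlmap/1.7 (https://sqlmap.org)"))
    print(is_blocked("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:120.0) Gecko/20100101 Firefox/120.0"))
```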

It is our hope that your website remains safe from harm. If you need help please feel free to contact us, and where possible we will gladly provide free advice.